- Static: the URL path is fixed and never changes, e.g. https://bitbucket.org/explore.

- Dynamic: the URL path is constructed dynamically and can include semantic information, e.g. https://bitbucket.org/jsmith/dotfiles/downloads; in this case jsmith is the user, dotfiles is the name of the source repository, and downloads is the feature.

- SEO: localization and internationalization are a must-have for modern web applications; these routes can combine the two cases above.

- Missing: this always happens: a URL changes and the resource is no longer available. What is the impact of handling a non-existing path?
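To make the four cases concrete, here is a minimal, framework-agnostic sketch. The route table, the `dispatch` helper and the feature names are hypothetical illustrations, not code from any of the benchmarked frameworks. Static paths are looked up in a set, dynamic and SEO paths are matched against regular expressions with named groups, and a missing path is only detected after every pattern has been tried.

```python
import re

# Hypothetical route table covering the four cases; not taken from any
# of the benchmarked frameworks.
STATIC_PATHS = {"/explore"}  # static: exact path match
PATTERNS = [
    # dynamic: /{user}/{repo}/{feature}
    ("dynamic", re.compile(
        r"^/(?P<user>[^/]+)/(?P<repo>[^/]+)/(?P<feature>downloads|issues)$")),
    # seo: /{locale}/{feature}, locale such as "en" or "en-us"
    ("seo", re.compile(
        r"^/(?P<locale>[a-z]{2}(?:-[a-z]{2})?)/(?P<feature>[^/]+)$")),
]


def dispatch(path):
    """Return (route kind, captured arguments) for a request path."""
    if path in STATIC_PATHS:           # one set lookup, no regex work
        return "static", {}
    for kind, regex in PATTERNS:       # patterns are tried in order
        match = regex.match(path)
        if match:
            return kind, match.groupdict()
    return "missing", {}               # reached only after every pattern failed


print(dispatch("/explore"))                    # ('static', {})
print(dispatch("/jsmith/dotfiles/downloads"))
print(dispatch("/en/downloads"))
print(dispatch("/not-found"))                  # ('missing', {})
```

Note that the missing case is the most expensive path through this kind of router, since no pattern can short-circuit the search.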

- bottle 0.11.6

- django 1.6

- falcon 0.1.7

- flask 0.10.1

- pylons 1.0.1

- pyramid 1.5a2

- tornado 3.1.1

- web2py 2.2.1

- wheezy.web 0.1.373

```
apt-get install make python-dev python-virtualenv \
    mercurial unzip
```

The source code is hosted on Bitbucket; let's clone it into some directory and set up a virtual environment (this will download all necessary package dependencies for each framework listed above).

```
hg clone https://bitbucket.org/akorn/helloworld
cd helloworld/02-routing && make env
```

Once the environment is ready, we can run the benchmarks:

```
env/bin/python benchmarks.py
```

Here are the raw numbers:

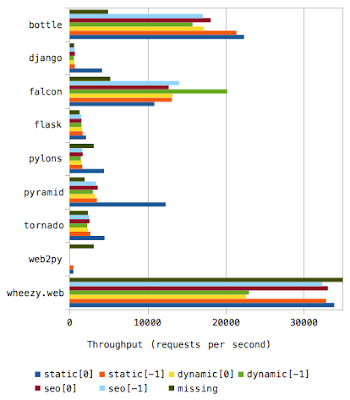

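| static[0] | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 4472 | 22364 | 66 | 33 |
| django | 23985 | 4169 | 186 | 91 |
| falcon | 9187 | 10885 | 95 | 26 |
| flask | 47296 | 2114 | 498 | 121 |
| pylons | 22621 | 4421 | 191 | 79 |
| pyramid | 8100 | 12345 | 65 | 49 |
| tornado | 22279 | 4489 | 187 | 68 |
| web2py | 19505 | 513 | 426 | 156 |
| wheezy.web | 2948 | 33920 | 26 | 24 |

| static[-1] | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 4673 | 21397 | 66 | 33 |
| django | 147691 | 677 | 1778 | 91 |
| falcon | 7621 | 13121 | 76 | 26 |
| flask | 58801 | 1701 | 695 | 121 |
| pylons | 61109 | 1636 | 788 | 79 |
| pyramid | 28504 | 3508 | 463 | 49 |
| tornado | 37438 | 2671 | 386 | 68 |
| web2py | 19508 | 513 | 426 | 156 |
| wheezy.web | 3041 | 32881 | 26 | 24 |

| dynamic[0] | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 5803 | 17233 | 70 | 36 |
| django | 158295 | 632 | 1945 | 90 |
| falcon | 7565 | 13219 | 75 | 26 |
| flask | 62888 | 1590 | 680 | 121 |
| pylons | 63505 | 1575 | 857 | 82 |
| pyramid | 30473 | 3282 | 511 | 51 |
| tornado | 42080 | 2376 | 446 | 79 |
| web2py | - | - | - | - |
| wheezy.web | 4425 | 22598 | 36 | 32 |

| dynamic[-1] | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 6341 | 15771 | 70 | 36 |
| django | 174482 | 573 | 2097 | 90 |
| falcon | 4946 | 20218 | 56 | 26 |
| flask | 65428 | 1528 | 697 | 122 |
| pylons | 69284 | 1443 | 914 | 82 |
| pyramid | 33526 | 2983 | 549 | 51 |
| tornado | 44404 | 2252 | 465 | 79 |
| web2py | - | - | - | - |
| wheezy.web | 4348 | 22999 | 36 | 32 |

| seo[0] | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 5524 | 18103 | 70 | 36 |
| django | 144856 | 690 | 1785 | 90 |
| falcon | 7871 | 12705 | 95 | 26 |
| flask | 65623 | 1524 | 741 | 122 |
| pylons | 58965 | 1696 | 794 | 82 |
| pyramid | 27653 | 3616 | 468 | 51 |
| tornado | 38529 | 2595 | 408 | 79 |
| web2py | - | - | - | - |
| wheezy.web | 3023 | 33084 | 26 | 24 |

| seo[-1] | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 5850 | 17094 | 70 | 36 |
| django | 149475 | 669 | 1857 | 90 |
| falcon | 7118 | 14049 | 86 | 26 |
| flask | 66575 | 1502 | 768 | 122 |
| pylons | 60901 | 1642 | 821 | 82 |
| pyramid | 29394 | 3402 | 486 | 51 |
| tornado | 39307 | 2544 | 417 | 79 |
| web2py | - | - | - | - |
| wheezy.web | 3089 | 32371 | 26 | 24 |

| missing | msec | rps | tcalls | funcs |
|:--|--:|--:|--:|--:|
| bottle | 20255 | 4937 | 209 | 77 |
| django | 168316 | 594 | 2086 | 89 |
| falcon | 19112 | 5232 | 292 | 26 |
| flask | 76259 | 1311 | 837 | 134 |
| pylons | 31882 | 3137 | 270 | 85 |
| pyramid | 51121 | 1956 | 704 | 84 |
| tornado | 42408 | 2358 | 433 | 65 |
| web2py | 3195 | 3130 | 178 | 59 |
| wheezy.web | 2375 | 42108 | 21 | 20 |

- msec: total time taken, in milliseconds
- rps: requests processed per second
- tcalls: total number of calls made by the corresponding web framework
- funcs: number of unique functions used
- [0] and [-1]: the first and the last route in the list; a dash means no result for that framework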
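The msec and rps columns are two views of the same measurement. Judging from the table itself (e.g. bottle static[0]: 4472 ms × 22364 rps / 1000 ≈ 100,000), each run appears to process about 100,000 requests; that count is an inference from the numbers, not something stated in the post. A small sketch of the relationship and of how a "N times faster" ratio falls out of the rps column:

```python
# Assumption: about 100,000 requests per run, inferred from msec * rps / 1000
# in the table above; the benchmark source is the authority on the real count.
REQUESTS_PER_RUN = 100000


def rps_from_msec(msec, requests=REQUESTS_PER_RUN):
    """Requests per second given the total elapsed time in milliseconds."""
    return requests / (msec / 1000.0)


def speedup(rps_a, rps_b):
    """How many times more requests per second A handles than B."""
    return rps_a / float(rps_b)


print(round(rps_from_msec(4472)))      # 22361 (table: 22364, bottle static[0])
print(round(speedup(33920, 4169), 1))  # 8.1  wheezy.web vs django, static[0]
print(round(speedup(32881, 677), 1))   # 48.6 wheezy.web vs django, static[-1]
```

The last two lines show why the first/last split matters: the same pair of frameworks differs by roughly 8x on the first route and roughly 49x on the last.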

Samples

Each web site has sections that combine some features. Let's consider a typical web site with 10 sections of 20 features each. Routing is set up this way:

```
# static routing
/section0/feature0
/section0/feature1
...
/section9/feature19

# dynamic routing
/{user}/{repo}/feature0
...
/{user}/{repo}/feature19

# seo routing
/{locale}/feature0
...
/{locale}/feature19

# missing
/not-found
```
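Measuring the first and the last route separately matters because many routers try patterns in order, so the cost of a match grows with the route's position. The sketch below builds route tables with the layout above and a naive linear-scan matcher that counts attempts. The `{name}` placeholder syntax comes from the listing; `sample_routes`, `compile_route` and `match_linear` are hypothetical helpers, and real frameworks differ (a dict lookup for static paths, for example, avoids the scan entirely).

```python
import re


def sample_routes():
    """Route templates following the sample layout (10 sections x 20 features)."""
    static = ["/section%d/feature%d" % (s, f) for s in range(10) for f in range(20)]
    dynamic = ["/{user}/{repo}/feature%d" % f for f in range(20)]
    seo = ["/{locale}/feature%d" % f for f in range(20)]
    return static, dynamic, seo


def compile_route(template):
    """Turn '{name}' placeholders into named groups matching one path segment."""
    pattern = re.sub(r"\{(\w+)\}", r"(?P<\1>[^/]+)", template)
    return re.compile("^" + pattern + "$")


def match_linear(compiled, path):
    """Try each route in order; return (index, kwargs, attempts)."""
    for index, regex in enumerate(compiled):
        match = regex.match(path)
        if match:
            return index, match.groupdict(), index + 1
    return None, {}, len(compiled)  # a missing path pays for every attempt


static, dynamic, seo = sample_routes()
static_re = [compile_route(t) for t in static]
dynamic_re = [compile_route(t) for t in dynamic]

print(match_linear(static_re, "/section0/feature0"))   # (0, {}, 1)
print(match_linear(static_re, "/section9/feature19"))  # (199, {}, 200)
print(match_linear(dynamic_re, "/jsmith/dotfiles/feature19"))
print(match_linear(static_re, "/not-found"))           # (None, {}, 200)
```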

The benchmark captures the throughput for the first (e.g. /section0/feature0) and the last item only (e.g. /section9/feature19).

Note that you can run this benchmark using any version of Python, including PyPy. Here are the make targets to use:

```
make env VERSION=3.3
make pypy
```

Environment specification:

- Intel Core 2 Duo @ 2.4 GHz x 2

- OS X 10.9, Python 2.7.6

Could you graph the evolution of req/sec over time? Thanks.

The numbers are captured by the Python standard module `timeit` (see benchmark.py:38), which doesn't provide the metrics you requested.
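For readers who want to reproduce this style of measurement, here is a minimal sketch of timing a direct WSGI call with `timeit`, with no HTTP server or network involved. `hello_app` is a stand-in application and `measure` is a hypothetical helper; this is not the code from benchmark.py. Because `timeit` returns only the total elapsed time, throughput is derived from that total, and per-interval figures such as req/sec over time are simply not recorded.

```python
import timeit
from wsgiref.util import setup_testing_defaults


def hello_app(environ, start_response):
    """Stand-in WSGI application (hypothetical, not a benchmarked framework)."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello World!"]


def make_environ(path):
    """Minimal WSGI environ for a path; wsgiref fills in the other keys."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)
    return environ


def call(app, environ):
    """Invoke a WSGI app directly and return (status, body)."""
    captured = []

    def start_response(status, headers, exc_info=None):
        captured.append(status)

    body = b"".join(app(environ, start_response))
    return captured[0], body


def measure(app, path, number=10000):
    """Total time in msec and requests per second for `number` calls."""
    environ = make_environ(path)
    seconds = timeit.timeit(lambda: call(app, environ), number=number)
    return seconds * 1000.0, number / seconds


msec, rps = measure(hello_app, "/section0/feature0")
print("msec=%d rps=%d" % (msec, rps))
```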

I've been testing your benchmarks with the HTTP overhead included, and I cannot get results similar to yours. "wheezy" is fast, but in several cases bottle has better performance.

Of course, there is not much difference between the results. In your first case, wheezy is 9 times faster than django, but when testing on uwsgi directly, it is only 2 times faster.

I think your charts attempt to show something that is unrealistic.

https://gist.github.com/3866512

What about concurrency... do you think 2 concurrent requests are realistic?

The benchmark represents nominal numbers... you are most likely running into network or CPU limitations (client or server). See the previous post about web framework performance: there are several frameworks that run almost at the speed of a plain, simple WSGI application, so the difference is not visible; for that reason there is an isolated benchmark to show efficiency... the same applies to template engines (another earlier post).

It does not matter whether it is realistic or not! In fact, on a low-powered server it could easily be a realistic configuration. What is needed is a deterministic scenario for all tests. Having 2 or 20 processes will demonstrate that uwsgi works well, but should not affect the results; throughput should scale linearly with the number of processes your server can handle.

With this in mind, it makes no sense to say that having two processes is unrealistic. The framework should respond proportionally to the number of processes running. Having one or many threads is not a parameter to be measured for a framework; it is a parameter to be measured for a WSGI server, and I think this is not the case here.

It makes no sense to show that a given framework gets 9 times better results than another when, once you put the HTTP layer on top, the 9 becomes a 2. Furthermore, when comparing the relative performance drop across frameworks, you can see that wheezy's percentage drop is larger than that of, e.g., django or tornado.

All I'm trying to say is that the vast difference is unrealistic. I understand that wheezy and bottle are both faster than django, tornado or flask, but the size of that difference is unreal, and I think that is pretty clear.

I do not mean to offend or to say that the tests are imperfect. Sorry if it seemed so.

PS: I ran the same tests in different configurations. The results have been proportional.

In your test the Apache Benchmark (ab) concurrency is 2. See http://mindref.blogspot.com/2012/09/python-fastest-web-framework.html.

The reason you do not see the difference is related to the environment you are using. If the limit is in your network, you will see lower CPU load, etc. This test gets rid of all these factors and just measures internal framework efficiency: no network, no web server, just a plain WSGI call. That simple.

Okay, I understand your point, but I have just one question. Running the benchmark as it is, without HTTP, just testing the URL dispatcher, gives me worse results than using uwsgi with all the overhead of the HTTP and network layers, etc.?

This confuses me a lot.

The same test is performed using the benchmark and using uwsgi + ab.

In my opinion, the benchmark should give better results, but it does not. Where am I wrong?

https://gist.github.com/3868290

You are missing the fact that the OS spends cycles on I/O wait.

The isolated benchmark is executed on a single CPU core; even so, you might get different results since the kernel moves the process between cores as it runs. You can overcome this with the `taskset` command to pin your process to a specific core... again, a Xeon processor shows better results here... longer test runs.
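On Linux the same pinning can be done from the shell, e.g. `taskset -c 0 env/bin/python benchmarks.py`, or from inside a Python process with `os.sched_setaffinity`, the standard-library counterpart of `taskset`. A minimal sketch; `pin_to_core` is a hypothetical helper, and the call does not exist on OS X (the benchmark machine above), where the helper simply reports that nothing was pinned.

```python
import os


def pin_to_core(core):
    """Restrict the current process (pid 0) to a single CPU core.

    Returns True if the affinity was applied, False where the platform
    does not provide sched_setaffinity (e.g. macOS, Windows).
    """
    if not hasattr(os, "sched_setaffinity"):
        return False
    os.sched_setaffinity(0, {core})
    return os.sched_getaffinity(0) == {core}


if __name__ == "__main__":
    if hasattr(os, "sched_getaffinity"):
        core = min(os.sched_getaffinity(0))  # first core we are allowed to use
    else:
        core = 0
    print("pinned to core %d: %s" % (core, pin_to_core(core)))
```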

uwsgi can be tuned to use more processes, e.g. one process per CPU core, so your server is better utilized. Take a look at the server load (using the `top` or `htop` command): if it reaches 4.00 on a 4-core server, you have loaded your server quite well... a higher number shows it is overloaded.
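The load-average rule of thumb can be expressed as load per core. A sketch, assuming the 1-minute load average and a ±10% band around one runnable process per core; the band and the labels are illustrative assumptions, not part of the reply, and `classify_load` is a hypothetical helper.

```python
import os


def classify_load(load, cores, tolerance=0.1):
    """Compare a load average with the number of CPU cores.

    A ratio near 1.0 means roughly one runnable process per core (server
    well loaded); clearly above 1.0 means work is queuing (overloaded).
    The 10% tolerance band is an illustrative assumption.
    """
    ratio = load / float(cores)
    if ratio < 1.0 - tolerance:
        return "spare capacity"
    if ratio <= 1.0 + tolerance:
        return "well loaded"
    return "overloaded"


def current_load_status():
    """Classify this machine's 1-minute load average (Unix only)."""
    one_minute, _, _ = os.getloadavg()
    return classify_load(one_minute, os.cpu_count() or 1)


print(classify_load(4.0, 4))  # well loaded
print(classify_load(6.5, 4))  # overloaded
```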

It is not correct to compare the two results directly; they serve different purposes. The first shows me the nominal possible difference between frameworks, while the second points to practically achievable results with the given hardware, OS kernel and application server (some configuration tricks apply to both, e.g. the maximum number of sockets). That difference tells me the bottleneck is not in the web framework but somewhere lower in the stack... it could be a client computer that is simply unable to push more packets to the server, etc.

https://groups.google.com/d/msg/django-developers/4h0eP_mBE8A/40ZnOiJwjQQJ

Comments?

Could you be a bit more concrete about the comments you would like to hear, please? Just ask your question or voice your concern, if any.

The Google Groups post basically says it all:

> Now that I've looked in detail at the test, it is because the test is nonsensical. Each time it tests the URLs, it constructs a fresh WSGI application. Each fresh application has to compile each URL in the urlconf before using it. It then destroys the application, and starts another one up.

The test works with the module `app`, located in a directory per framework. The loading code is outside of the `timeit` call.
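The distinction between loading and timing can be illustrated with `timeit`: the application (and its route table) is built once, and only the request calls run inside the timed callable. `make_app` below is a hypothetical stand-in for importing a framework's `app` module, with a counter showing that it runs only once no matter how many requests are timed.

```python
import timeit

factory_calls = {"count": 0}


def make_app():
    """Hypothetical stand-in for loading a framework's `app` module:
    the route table is built here, once."""
    factory_calls["count"] += 1
    routes = {"/section%d/feature%d" % (s, f): b"ok"
              for s in range(10) for f in range(20)}

    def app(environ, start_response):
        body = routes.get(environ["PATH_INFO"])
        if body is None:
            start_response("404 Not Found", [("Content-Type", "text/plain")])
            return [b"not found"]
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [body]

    return app


def start_response(status, headers, exc_info=None):
    """No-op start_response; the sketch only times the call itself."""
    return None


app = make_app()  # load phase: outside the timed statement
environ = {"PATH_INFO": "/section9/feature19"}
seconds = timeit.timeit(lambda: app(environ, start_response), number=10000)
print("factory calls: %d, elapsed: %.4f s" % (factory_calls["count"], seconds))
```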

The wheezy.web seo routing benchmark has been improved by approximately 40%.

I think it would be really interesting if you could describe why wheezy is faster. For example, I remember a talk (maybe it was linked from your template post?) showing benchmarks of the speed of different operations in Python.

In a similar way, it would be nice if you could take two or three frameworks and explain why wheezy is faster. Is it due to the implementation? Is it because the features allow for better optimizations? Is it because it has fewer features (maybe sensible ones)? This information would be really useful, and not only for people in the Python web community.

Chris, thank you for the comment. That might be an idea for another post, but my idea was to keep things simple without spending much time on in-depth analysis of what is a test, what is contributed, what is a sample, etc. The trend most likely remains.

Thank you.

Test coverage tells me something, but not the split between code/tests/demos/etc.

You are mistaken; I do not present a bigger code base as something negative. The problem is the number of PEP8 or CC errors that need attention. The numbers, being normalized, give you an idea regardless of the code base size.

Where is Pylons? :)

Just added.
